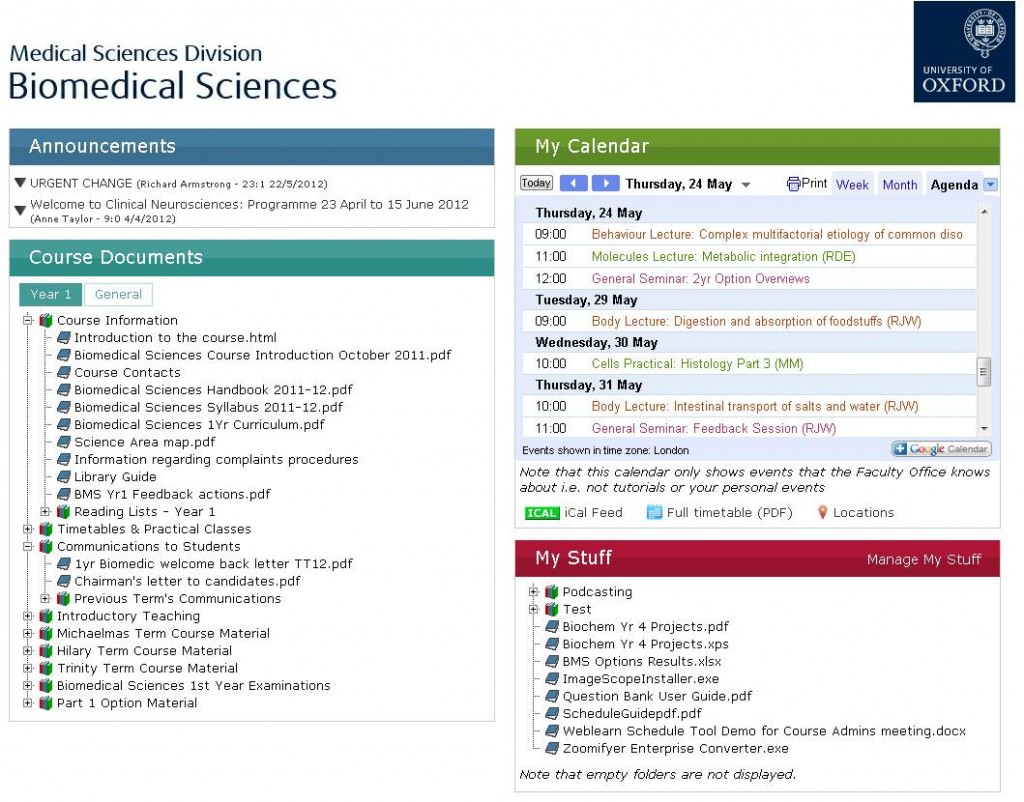

This year (2011-12), a new course, Biomedical Sciences, started within the Medical Sciences Division. This course combines teaching specific to the course with teaching shared with other courses. In response to this, we wanted to ensure that the students’ experience of the course in WebLearn (Oxford’s Sakai-based VLE) was coherent and personalised, and didn’t require them to search through different parts of WebLearn to find what they needed.

Therefore, we decided to create a portal page that makes it easy for students to access the information relevant to them (timetables, documents, etc.). We wanted the page, and all of its content, to remain in WebLearn, so that managing the content and the users stayed straightforward for lecturers and administrators accustomed to using WebLearn.

The resulting portal page, shown above, gives students a single, modern-looking page on which they can see recent announcements, view their timetable, and access documents both relating to their course and from their personal site within WebLearn.

In order to achieve this, it was necessary to create a multi-level structure for the site, with the main site containing a subsite for each year of the course, and each year site containing a subsite for each module.

To dip quickly into the technical aspects, the portal page makes significant use of JavaScript, in particular the jQuery library. Where possible, the content, along with the user’s status and year group, is gathered using Ajax requests to ‘WebLearn direct’ URLs, which return information, such as a user’s recent announcements, in a machine-readable format, e.g. JSON. A brief summary of how the different sections of the page are created is given below:
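As a rough illustration of what a request to a ‘direct’ URL might look like, the sketch below builds such a URL and shows, in a comment, how it could be passed to jQuery. The helper name `directUrl`, the entity path `announcement/user` and the parameter names `n` and `d` are assumptions made for this example; the paths and parameters actually available depend on the WebLearn/Sakai version installed.

```javascript
// Hypothetical helper: build a 'direct' URL that asks for a JSON response.
// The "/direct/" prefix and ".json" suffix are assumptions for this sketch.
function directUrl(entityPath, params) {
  const query = Object.entries(params || {})
    .map(([key, value]) =>
      encodeURIComponent(key) + "=" + encodeURIComponent(value))
    .join("&");
  return "/direct/" + entityPath + ".json" + (query ? "?" + query : "");
}

// In the browser, the URL could then be requested with jQuery, e.g.:
//
//   $.getJSON(directUrl("announcement/user", { n: 5, d: 30 }), function (data) {
//     // `data` is the parsed JSON; its exact shape depends on the server.
//   });
```

Keeping the URL construction in a small pure function means the same helper can serve every section of the page that relies on direct methods.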

Announcements

WebLearn’s direct methods are used to get a user’s announcements, specifying the number and age to show. These are then presented to the user in an ‘accordion’, where clicking on an announcement title expands further details of that announcement.
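The step from announcement data to accordion markup could be sketched as follows. This is a minimal illustration, not the portal's actual code: it assumes each announcement is an object with `title` and `body` fields, treats the body as plain text, and assumes the jQuery UI accordion widget (which works on alternating header/content elements) is used for the expand/collapse behaviour. The function names are hypothetical.

```javascript
// Escape text so it can be placed safely inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Turn a list of announcements into header/content pairs:
// one <h3> (the clickable title) followed by one <div> (the details).
// Assumes each item has `title` and `body` fields (an assumption of this sketch).
function accordionHtml(announcements) {
  return announcements
    .map(function (a) {
      return "<h3>" + escapeHtml(a.title) + "</h3>" +
             "<div>" + escapeHtml(a.body) + "</div>";
    })
    .join("");
}

// In the browser, with jQuery UI loaded (an assumption):
//
//   $("#announcements").html(accordionHtml(items)).accordion();
```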

Calendar

The requirement for the calendar was to bring together multiple module calendars into a single view, with a different colour for each module. This was achieved as follows:

- The calendars for each module reside in the module sites.

- A Google account is subscribed to the calendar (ICS) feed provided by WebLearn for each module.

- A Google-calendar view of all the module calendars, with each one assigned a different colour, is embedded into the page.

- In order to combine the multiple feeds back into a single ICS feed that students could subscribe to, e.g. on a smartphone, we used a tool called MashiCal. However, it requires manual input of the feeds to be ‘mashed’; this has not been a problem so far, as the students all do the same module in Year 1.
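To make the idea of ‘mashing’ several ICS feeds into one more concrete, here is a minimal sketch of the general technique. It is not how MashiCal works internally (that is not described here); it simply copies every `VEVENT` block from each feed into a single new `VCALENDAR`. It deliberately ignores time zone definitions, duplicate events and folded lines, and the function names and `PRODID` value are made up for the example.

```javascript
// Pull every VEVENT block (as one CRLF-joined string) out of an ICS feed.
function extractEvents(icsText) {
  const events = [];
  let current = null; // lines of the event being collected, if any
  for (const line of icsText.split(/\r?\n/)) {
    if (line === "BEGIN:VEVENT") {
      current = [line];
    } else if (current) {
      current.push(line);
      if (line === "END:VEVENT") {
        events.push(current.join("\r\n"));
        current = null;
      }
    }
  }
  return events;
}

// Wrap the events from all feeds in a single calendar.
function mergeCalendars(icsTexts) {
  const events = icsTexts.flatMap(extractEvents);
  return [
    "BEGIN:VCALENDAR",
    "VERSION:2.0",
    "PRODID:-//Example//Merged module calendars//EN", // placeholder value
    ...events,
    "END:VCALENDAR"
  ].join("\r\n") + "\r\n";
}
```

A real aggregator would also need to carry over any `VTIMEZONE` components the events refer to.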

Course Docs

Documents and resources are held in the subsites for each year/module, with some general resources in the top-level site. At the time of creating the portal page, there were no direct methods for accessing resources, so a somewhat clunkier method was used. The portal page requests the web view (an HTML page) of the appropriate resources and then uses jQuery to dig down through the folder structure, extract the links to all of the resources and present them in a tree view.
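The final step, arranging the extracted links into a tree, might look something like the sketch below. It assumes the links have already been collected as a flat list of paths (the jQuery traversal of the HTML pages is not shown), and the function name, node shape and example paths are all hypothetical.

```javascript
// Build a nested folder tree from a flat list of resource paths.
// Each node has a name, a map of child folders and a list of files.
function buildTree(paths) {
  const root = { name: "", children: {}, files: [] };
  for (const path of paths) {
    const parts = path.split("/").filter(Boolean); // drop empty segments
    const fileName = parts.pop();                  // last segment is the file
    let node = root;
    for (const folder of parts) {
      if (!node.children[folder]) {
        node.children[folder] = { name: folder, children: {}, files: [] };
      }
      node = node.children[folder];
    }
    node.files.push({ name: fileName, href: path });
  }
  return root;
}

// Example with made-up paths:
const tree = buildTree([
  "/year1/module1/notes.pdf",
  "/year1/handbook.pdf"
]);
// tree.children.year1 holds handbook.pdf directly and
// contains the child folder module1, which holds notes.pdf.
```

A tree in this form can then be walked recursively to produce nested lists for whichever tree-view widget the page uses.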

My Stuff

This provides a view of everything in a student’s My Workspace resources folder, produced in the same way as the Course Docs. Students can only view their resources from the portal page – they have to actually go to their workspace to upload/edit resources.

Future Developments

- Access resources for Course Docs and My Stuff using direct methods (now available after a recent upgrade), as the current process of extracting links from HTML pages is slow and error-prone.

- Extend the functionality of My Stuff, in particular by enabling drag-and-drop upload of files, so students can quickly upload files from any computer, e.g. results in the lab.

- Create our own ‘calendar aggregator’ to automatically combine ICS feeds for each student based on the modules they are studying.
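As one possible starting point for the calendar aggregator, the sketch below picks out the feeds to combine for a given student. Nothing here reflects an existing implementation: the function name, the module identifiers and the feed URLs are all invented for illustration, and how a student's modules would be looked up is left open.

```javascript
// Given the modules a student takes and a map of module -> ICS feed URL,
// return the feed URLs that should be combined for that student.
// Modules without a known feed are skipped.
function feedsForStudent(studentModules, moduleFeeds) {
  return studentModules
    .filter((m) => Object.prototype.hasOwnProperty.call(moduleFeeds, m))
    .map((m) => moduleFeeds[m]);
}

// Example with made-up module names and URLs:
const moduleFeeds = {
  cells: "https://weblearn.example/calendars/cells.ics",
  genes: "https://weblearn.example/calendars/genes.ics",
  neuro: "https://weblearn.example/calendars/neuro.ics"
};
const feeds = feedsForStudent(["cells", "neuro"], moduleFeeds);
// `feeds` now lists the cells and neuro feed URLs, in that order.
```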